This tutorial is about z-standardization (z-transformation). We will discuss what the z-score is, how z-standardization works, and what the standard normal distribution is. We will also look at the z-score table and what it is used for.

What is z-standardization?

Z-standardization is a statistical procedure used to make data points from different datasets comparable. In this procedure, each data point is converted into a z-score. A z-score indicates how many standard deviations a data point is from the mean of the dataset.

Example of z-standardization

Suppose you are a doctor and want to examine the blood pressure of your patients. For this purpose, you measure the blood pressure of a sample of 40 patients. From these data, you can calculate the mean, i.e., the average blood pressure of the 40 patients.

Now one of the patients asks you how high his blood pressure is compared to the others. You tell him that his blood pressure is 10 mmHg above average. The question now is whether 10 mmHg is a lot or a little.

If the other patients cluster very closely around the mean, then 10 mmHg is a lot relative to the spread; but if the other patients are spread very widely around the mean, then 10 mmHg might not be that much.

The standard deviation tells us how much the data is spread out. If the data are close to the mean, we have a small standard deviation; if they are widely spread, we have a large standard deviation.

Let's say we get a standard deviation of 20 mmHg for our data. Roughly speaking, this means that a typical patient's blood pressure lies about 20 mmHg from the mean.

The z-score now tells us how far a person is from the mean in units of standard deviation. So a person whose value lies one standard deviation above the mean has a z-score of 1. A person two standard deviations above the mean has a z-score of 2, and a person three standard deviations above the mean has a z-score of 3.

Accordingly, a person one standard deviation below the mean has a z-score of -1, a person two standard deviations below the mean has a z-score of -2, and a person three standard deviations below the mean has a z-score of -3.

And if a person's value equals the mean exactly, they deviate by zero standard deviations from the mean and receive a z-score of 0.
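Reading a z-score backwards can help build intuition: a z-score of z means the value lies z standard deviations from the mean, so the corresponding raw value is mean + z × standard deviation. The short sketch below assumes the example values used later in this tutorial (mean 130 mmHg, standard deviation 20 mmHg); the function name `value_at_z` is hypothetical.

```python
def value_at_z(z: float, mean: float, sd: float) -> float:
    """Return the raw value that lies z standard deviations from the mean."""
    return mean + z * sd

# Assumed example values: mean 130 mmHg, standard deviation 20 mmHg
for z in range(-3, 4):
    print(f"z = {z:+d}  ->  {value_at_z(z, 130, 20):.0f} mmHg")
```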

Thus, the z-score indicates how many standard deviations a measurement is from the mean. As mentioned, the standard deviation is just a measure of the dispersion of the patients' blood pressure around the mean.

In short, the z-score helps us understand how exceptional or normal a particular measurement is compared to the overall average.

Calculating the z-score

How do we calculate the z-score? We want to convert the original data, in our case the blood pressure values, into z-scores, i.e., perform a z-standardization.

The formula for z-standardization is:

z = (x - μ) / σ

Here, z is the z-value we want to calculate; x is the observed value, in our case the blood pressure of the person in question; μ is the mean of the sample, in our case the mean of all 40 patients; and σ is the standard deviation of the sample, i.e., the standard deviation of our 40 patients.

Caution: μ and σ are actually the mean and standard deviation of the population, but in our case we only have a sample. However, under certain conditions, which we will discuss later, we can estimate the mean and standard deviation using the sample.

Let's assume that the 40 patients in our example have a mean of 130 and a standard deviation of 20. Substituting both values gives z = (x - 130) / 20.

Now we can insert the blood pressure of each individual patient for x and calculate the z-value. Let's do this for the first patient. If this patient has a blood pressure of 97, we enter 97 for x and get z = (97 - 130) / 20 = -1.65.
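The calculation for a single patient is a direct translation of the formula. A minimal sketch, assuming the example values (mean 130, standard deviation 20); the helper name `z_score` is hypothetical:

```python
def z_score(x: float, mu: float, sigma: float) -> float:
    """Number of standard deviations that x lies from the mean mu."""
    return (x - mu) / sigma

# Values from the example: mean 130, standard deviation 20, patient with 97 mmHg
z = z_score(97, 130, 20)
print(round(z, 2))  # -1.65
```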

This person therefore deviates from the mean by -1.65 standard deviations. We can now do this for all patients.
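Standardizing a whole dataset works the same way, with the mean and standard deviation computed from the data. The sketch below uses a handful of hypothetical readings chosen for illustration (not the 40 patients from the text) and Python's `statistics` module; `standardize` is a hypothetical helper name. Whatever the original unit, the resulting z-values have a mean of 0 and a standard deviation of 1.

```python
from statistics import mean, stdev

def standardize(values: list[float]) -> list[float]:
    """Convert every value to a z-score using the sample mean and sample standard deviation."""
    m = mean(values)
    s = stdev(values)  # sample standard deviation (n - 1 in the denominator)
    return [(v - m) / s for v in values]

# Hypothetical readings for illustration only
readings = [97, 118, 123, 130, 135, 142, 165]
z_values = standardize(readings)
for bp, z in zip(readings, z_values):
    print(f"{bp} mmHg -> z = {z:+.2f}")
```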

Regardless of the unit of the original data, we now have an overview showing how far each person deviates from the mean in units of the standard deviation.

Of course, we only have a sample drawn from a larger population. But if the data are normally distributed and the sample size is greater than 30, we can use the z-value to estimate what percentage of patients have a blood pressure lower than 110, for example, and what percentage have a blood pressure higher than 110.
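The 110 mmHg question can be sketched with Python's standard library, where `statistics.NormalDist().cdf(z)` returns the proportion of the standard normal distribution below z (the same number a z-table gives). This assumes the data are normal with mean 130 and standard deviation 20, treated as population values.

```python
from statistics import NormalDist

mu, sigma = 130, 20          # example values from the text, treated as population values
z = (110 - mu) / sigma       # z = -1.0
below = NormalDist().cdf(z)  # area under the standard normal curve left of z
print(f"below 110: {below:.2%}, above 110: {1 - below:.2%}")
```

With these assumptions, a blood pressure of 110 lies exactly one standard deviation below the mean, so roughly 16% of patients fall below it.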

But how does this work? If the initial data is normally distributed, we obtain a so-called standard normal distribution through z-standardization.

The standard normal distribution is a specific type of normal distribution with a mean value of 0 and a standard deviation of 1.

The special feature is that any normal distribution, regardless of its mean or standard deviation, can be converted into a standard normal distribution.
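The conversion can be checked numerically: the proportion below a value x in a normal distribution with mean μ and standard deviation σ equals the proportion below its z-value in the standard normal distribution. A sketch, assuming a normal model for blood pressure with mean 130 and standard deviation 20:

```python
from statistics import NormalDist

bp = NormalDist(mu=130, sigma=20)   # assumed normal model for blood pressure
std = NormalDist()                  # standard normal: mean 0, standard deviation 1

for x in (97, 110, 123, 150):
    z = (x - bp.mean) / bp.stdev
    # Same proportion whether computed on the original scale or on the z-scale
    print(x, round(bp.cdf(x), 4), round(std.cdf(z), 4))
```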

Since we now have a standardized distribution, all we need is a table that lists, for as many z-values as possible, the percentage of values that lie below each z-value.

You can find such a table in almost every statistics book or here: Table of the z-distribution. The question now is how to read this table.

If, for example, we have a z-value of -2, then we can read a value of 0.0228 from this table.

This means that 2.28% of the values are smaller than a z-value of -2. Since the proportions always add up to 100% (or 1), 97.72% of the values are greater.
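The numbers in a z-table are values of the cumulative distribution function of the standard normal distribution. As a sketch, Python's `statistics.NormalDist().cdf` reproduces the table entries for z = -2 and z = 0:

```python
from statistics import NormalDist

phi = NormalDist().cdf   # cumulative distribution function of the standard normal

for z in (-2, 0):
    below = phi(z)
    print(f"z = {z}: {below:.4f} below, {1 - below:.4f} above")
```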

And with a z-value of zero, we are exactly in the middle and get a value of 0.5. Therefore, 50% of the values are smaller than a z-value of 0 and 50% are greater. Because the normal distribution is symmetrical, we can also read off the probabilities for positive z-values from the negative part of the table.

If we have a z-value of 1, we only need to look up -1. However, note that in this case the value we read tells us what percentage of the values are greater than the z-value. So with a z-value of 1, 15.87% of the values are larger and 84.13% are smaller.

But what if we want to look up a z-value of -1.81? For this we need the other columns: the rows give the z-value to one decimal place and the columns give the second decimal. We therefore find -1.81 in row -1.8 and column 0.01, which gives a value of 0.0351.
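The symmetry argument can be sketched in a few lines: the area above z = 1 equals the area below z = -1, so a table of negative z-values is enough.

```python
from statistics import NormalDist

phi = NormalDist().cdf

# Symmetry: the area above +1 equals the area below -1
above_1 = phi(-1)
below_1 = 1 - phi(-1)
print(f"{above_1:.2%} above z = 1, {below_1:.2%} below z = 1")
```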
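Reading a row and a column of the table can be mimicked in code. The helper below is hypothetical and only imitates the layout of a printed z-table: in the negative part of the table, the column digit extends the magnitude of the row value, so row -1.8 and column 0.01 stand for z = -1.81.

```python
from statistics import NormalDist

def z_table_lookup(row: float, column: float) -> float:
    """Hypothetical helper mimicking a printed z-table.

    The row gives the z-value to one decimal (e.g. -1.8) and the column the
    second decimal (e.g. 0.01). For negative rows the column extends the
    magnitude, so row -1.8 and column 0.01 mean z = -1.81.
    """
    z = row - column if row < 0 else row + column
    return round(NormalDist().cdf(z), 4)

print(z_table_lookup(-1.8, 0.01))  # 0.0351
```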

Now let's look at the blood pressure example again. If we want to know what percentage of patients have a blood pressure below 123, we can use z-standardization to convert a blood pressure of 123 into a z-value: z = (123 - 130) / 20 = -0.35.

Now we look up a z-value of -0.35 in the z-table and find a value of 0.3632. This means that 36.32% of the values are smaller than a z-value of -0.35 and 63.68% are larger.
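Both steps, standardizing and looking up the proportion, can be combined in a short sketch. It assumes the example mean of 130 and standard deviation 20 and uses `statistics.NormalDist` in place of the printed table:

```python
from statistics import NormalDist

mu, sigma = 130, 20              # example mean and standard deviation from the text
z = (123 - mu) / sigma           # standardize 123 mmHg
below = NormalDist().cdf(z)      # proportion below z under the standard normal curve
print(f"z = {z:.2f}, below: {below:.2%}, above: {1 - below:.2%}")
```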

Compare different data sets with the z-score

There is another important application of z-standardization: it can make values measured in different ways comparable. Here is an example.

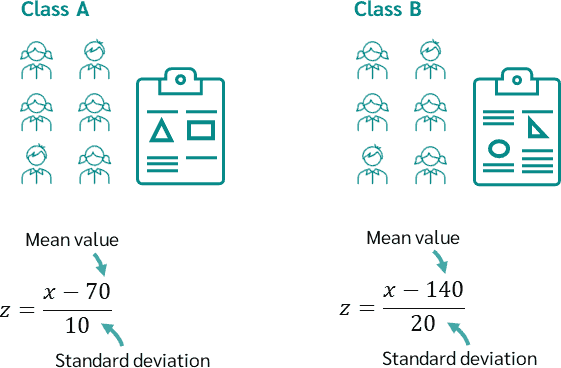

Suppose we have two classes, class A and class B, that each took a different mathematics test.

The tests are designed differently, have a different level of difficulty and a different maximum score.

In order to be able to compare the performance of the pupils in the two classes fairly, we can apply the z-standardization.

The mean score in class A was 70 points with a standard deviation of 10 points. The mean score in class B was 140 points with a standard deviation of 20 points.

We now want to compare the performance of Max from class A, who scored 80 points, with the performance of Emma from class B, who scored 160 points.

To do this, we calculate the z-values of Max and Emma. For Max we enter 80 for x and get z = (80 - 70) / 10 = 1. For Emma we enter 160 for x and also get z = (160 - 140) / 20 = 1.

The z-values of Max and Emma are therefore the same. This means that both students performed equally well relative to the mean and spread of their respective classes: both are exactly one standard deviation above their class mean.
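The comparison can be sketched with the same formula applied to each class with its own mean and standard deviation (the helper name `z_score` is hypothetical):

```python
def z_score(x: float, mean: float, sd: float) -> float:
    """Number of standard deviations that x lies from the class mean."""
    return (x - mean) / sd

max_z = z_score(80, 70, 10)     # Max, class A: mean 70, sd 10
emma_z = z_score(160, 140, 20)  # Emma, class B: mean 140, sd 20
print(max_z, emma_z)  # 1.0 1.0
```

Although Emma's raw score is twice Max's, the z-values show that both are equally far above their own class average.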

Assumptions

But what about the assumptions? Can we simply calculate a z-standardization and use the table of the standard normal distribution?

The z-standardization itself, i.e., the conversion of the data points into z-values using this formula, is not subject to any strict conditions. It can be carried out regardless of how the data are distributed.

However, if we use the resulting z-values in the context of the standard normal distribution for statistical analyses (e.g. for hypothesis tests or confidence intervals), certain assumptions must be met.

The z-distribution assumes that the underlying population is normally distributed and that the mean (μ) and standard deviation (σ) of the population are known.

However, since in practice you rarely have the entire population, the population mean and standard deviation are usually unknown, so this requirement is often not met. Fortunately, there is an alternative.

Although the z-distribution is defined for normally distributed populations, the central limit theorem can be applied to large samples. This theorem states that the sampling distribution of the sample mean approaches a normal distribution as the sample size increases, even if the data themselves are not normally distributed; a sample size greater than 30 is a common rule of thumb for "large enough". Therefore, for samples larger than 30, the standard normal distribution can be used as an approximation for analyses based on the mean, and the population mean and standard deviation can be estimated from the sample.
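The central limit theorem can be illustrated with a small simulation. The sketch below assumes a skewed, clearly non-normal population (exponential with mean 1 and standard deviation 1), draws many samples of size 40, and checks that the sample means behave approximately like a normal distribution with mean 1 and standard deviation 1/√40. The population, sample size, and number of repetitions are arbitrary choices for illustration.

```python
import random
from math import sqrt
from statistics import mean, stdev

random.seed(1)  # fixed seed so the simulation is reproducible

# Assumption for illustration: exponential population with mean 1 and sd 1
n = 40          # size of each sample
repeats = 5000  # number of samples drawn

sample_means = []
for _ in range(repeats):
    sample = [random.expovariate(1.0) for _ in range(n)]
    sample_means.append(sum(sample) / n)

# CLT prediction: sample means approx. normal with mean 1 and sd 1 / sqrt(n)
predicted_se = 1 / sqrt(n)
m, s = mean(sample_means), stdev(sample_means)

# Share of standardized means within +/-1.96 (about 95% under a normal distribution)
within = sum(abs((x - 1) / predicted_se) <= 1.96 for x in sample_means) / repeats
print(round(m, 3), round(s, 3), round(predicted_se, 3), round(within, 3))
```

Note that the individual values remain skewed; only the distribution of the sample means becomes approximately normal.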

When the mean and standard deviation are estimated from the sample, x̄ (x-bar) is usually written instead of μ for the mean, and s instead of σ for the standard deviation.
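In Python's `statistics` module, the distinction between the sample estimate s and the population value σ corresponds to `stdev` (divides by n - 1) versus `pstdev` (divides by n). A sketch using hypothetical readings chosen for illustration:

```python
from statistics import mean, stdev, pstdev

# Hypothetical blood-pressure sample (illustration only)
sample = [97, 118, 123, 130, 135, 142, 165]

x_bar = mean(sample)   # sample mean, written x̄
s = stdev(sample)      # sample standard deviation s (divides by n - 1)
sigma_if_population = pstdev(sample)  # divides by n; appropriate only if this were the whole population

z_first = (sample[0] - x_bar) / s     # z-value of the first reading using the estimates
print(x_bar, round(s, 2), round(sigma_if_population, 2), round(z_first, 2))
```

For a sample, s is the usual estimate of σ; it is slightly larger than the n-denominator version, which tends to underestimate the population spread.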

The z-standardization should not be confused with the z-test or the t-test. If you want to know what the t-test is, please watch the following video.